Auditory cortex: physiology

Authors: Pablo Gil-Loyzaga

Contributors: Rémy Pujol, Sam Irving

The anatomical and functional characteristics of the human auditory cortex are very complex, and many questions still remain about the integration of auditory information at this level.

History

The first studies linking the structure and function of the cerebral cortex of the temporal lobe with auditory perception and speech were carried out by Paul Broca (1824-1880) and Carl Wernicke (1848-1904). Descriptions of Broca’s aphasia (a speech disturbance caused by a lesion in Brodmann’s areas 44 and 45, now also known as Broca’s area) and Wernicke’s aphasia (a disruption in speech perception caused by damage to Brodmann’s area 22) enabled the location of hearing and speech processing to be determined within the cerebral cortex.

Function of the Auditory Cortex

Classically, two main functional regions have been described in auditory cortex, together with a surrounding belt region:

- Primary auditory cortex (AI), composed of neurons involved in decoding the cochleotopic and tonotopic spatial representation of a stimulus.

- Secondary auditory cortex (AII), which doesn’t have clear tonotopic organisation but has an important role in sound localisation and analysis of complex sounds: in particular for specific animal vocalisations and human language. It also has a role in auditory memory.

- The belt region, surrounding AI and AII, which helps to integrate hearing with other sensory systems.

Function of primary auditory cortex

In AI, neurons are selective for particular frequencies and are arranged in isofrequency bands that are tonotopically organised. The precise spatial distribution of the isofrequency bands is related to the organisation of the auditory receptors. Their activity depends upon the characteristics of the stimulus: frequency, intensity and position of the sound source in space. Functionally, this region is strongly influenced by the waking state of the subject. A number of very specific neurons in AI are also involved in the analysis of complex sounds.

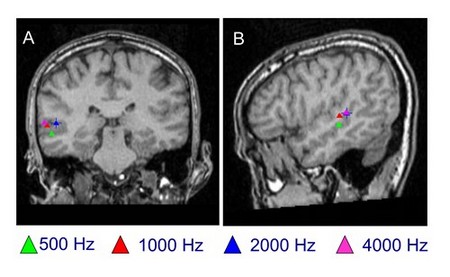

New techniques for studying the cerebral cortex (functional magnetic resonance imaging: fMRI; positron emission tomography: PET; and magnetoencephalography: MEG) suggest that the frequency distribution seen in animals (with traditional experimental methods) does not correspond exactly to that seen in humans, although they all have isofrequency bands, as seen using MEG below. fMRI in humans suggests that low frequencies are encoded in the superficial posterolateral regions of the sylvian fissure, whereas high frequencies are located in the deeper and anteromedial regions. It is important to note, however, that a degree of variation exists between individuals.

Magnetoencephalography (MEG): localisation of pure tones in a normal hearing subject

Localisation of pure tones (500 Hz, 1000 Hz, 2000 Hz and 4000 Hz) in frontal (A) and lateral (B) planes.

Image P. Gil-Loyzaga, Centre MEG de l’Université Complutense (Madrid).

Temporal integration of auditory stimuli

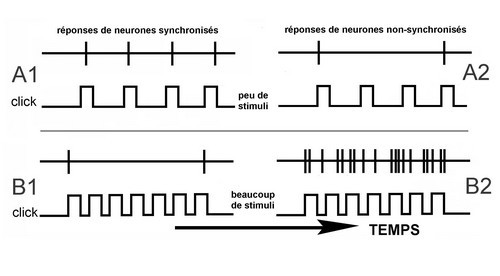

When awake, humans, like other animals, are able to perceive the small temporal variations of complex sounds. These variations are essential to the comprehension of human speech. A number of studies of AI in awake primates have identified two distinct populations of neurons, synchronous and asynchronous, that encode sequential stimuli differently.

- Synchronous neurons analyse slow temporal changes. They respond precisely to low-rate stimulation (A1), but are unable to maintain their activity if the number of stimuli increases. The fast changes in rate are perceived by these neurons as a continuous tone. They are involved in both frequency and intensity analysis.

- Asynchronous neurons analyse fast temporal changes (of many stimuli). They can determine short-duration variations and can accurately distinguish one stimulus from the next.

The functional division of the auditory cortex enables temporal variations of a stimulus to be decoded extremely accurately compared to other centres of the auditory pathway. It allows more information to be obtained about complex sounds, as well as the location of a sound source and its motion.

Figure labels (French to English): Reponses des neurones synchronises = responses of synchronous neurons; Reponses des neurones non-synchronises = responses of asynchronous neurons; Peu de stimuli = few stimuli; Beaucoup de stimuli = many stimuli; Temps = time.

Synchronous and asynchronous neurons

- Synchronous neurons respond to every stimulus (click) when the interval between stimuli in a train is greater than 20 ms (A1). As the interstimulus interval decreases (i.e. the repetition rate gets faster), these neurons start to desynchronise their firing. When the interstimulus interval falls below 10 ms (B1), these neurons fire only at the beginning and the end of the stimulus train (onset and offset responses, respectively).

- Asynchronous neurons do not respond synchronously to stimuli (A2 and B2), but their activity increases progressively to a very high discharge rate (B2).
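The two response types described above can be illustrated with a deliberately simplified toy model. This is only a sketch, not a physiological simulation: the 20 ms and 10 ms thresholds come from the text, whereas the function names, the "every other click" behaviour in the intermediate range, and the `gain` and `max_rate_hz` values are assumptions introduced purely for illustration.

```python
from typing import List

SYNC_LIMIT_MS = 20.0    # above this interval, every click is followed (from the text)
ONSET_ONLY_MS = 10.0    # below this interval, only onset/offset responses remain (from the text)


def synchronous_response(n_clicks: int, interval_ms: float) -> List[int]:
    """Return the indices of the clicks that evoke a spike in a toy synchronous neuron."""
    if n_clicks <= 0:
        return []
    if interval_ms > SYNC_LIMIT_MS:
        # Slow train: one time-locked response per click.
        return list(range(n_clicks))
    if interval_ms < ONSET_ONLY_MS:
        # Fast train: only the first and last clicks are marked.
        return sorted({0, n_clicks - 1})
    # Intermediate range: the text only says firing "starts to desynchronise".
    # Following every other click is an assumption made for this sketch.
    return list(range(0, n_clicks, 2))


def asynchronous_rate(interval_ms: float, gain: float = 0.5,
                      max_rate_hz: float = 200.0) -> float:
    """Return a toy discharge rate (Hz) that grows with click rate, then saturates.

    `gain` and `max_rate_hz` are hypothetical values, not measured ones.
    """
    click_rate_hz = 1000.0 / interval_ms
    return min(max_rate_hz, gain * click_rate_hz)


if __name__ == "__main__":
    for interval in (30.0, 15.0, 5.0):
        print(interval,
              synchronous_response(5, interval),
              round(asynchronous_rate(interval), 1))
```

In this sketch the synchronous neuron carries timing information explicitly (which clicks were followed), whereas the asynchronous neuron carries it implicitly in its discharge rate, which is the contrast the figure is intended to show.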

Spectral integration of auditory stimuli

Animal vocalisations and human language vary greatly between individuals. Voluntary and involuntary variations also exist within the same subject. Although the perception of auditory messages requires analysis of the individual frequencies that make up a complex sound, analysis of the overall spectrum is even more important.

If the sound spectrum containing the entire soundwave profile of a complex sound (the sound envelope) is maintained, good hearing and phoneme comprehension can occur, even when certain specific frequencies are removed.

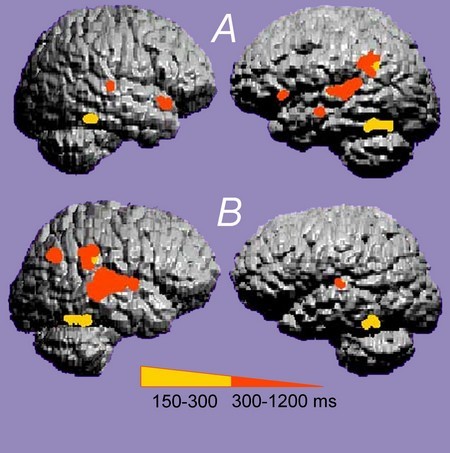

Non-invasive MEG imaging can determine, with excellent spatial precision, the location of evoked activity that occurs within a few milliseconds. MEG is an appropriate technique for studying complex auditory functions, such as speech, as well as the potential functional effects of cortical damage.

MEG in a normal subject (A) and a dyslexic subject (B)

In normal-hearing subjects (A), specific linguistic cortical activation occurs mainly in the left auditory cortex. In dyslexic subjects (B), activation is more prominent in the right cortex and is more diffuse.

Image P. Gil-Loyzaga, Centre MEG de l’Université Complutense (Madrid)